“A Los Angeles man who was trying to make his way to an Arizona airport in one of Waymo’s driverless taxis last month got stuck in an incredibly frustrating loop where the car just traveled in circles. The man even says he almost missed his flight back home.” More. Watch video on the incident.

“This isn’t the first time that Waymo cars have gotten very confused and seemingly gotten stuck in loops. Back in December, auto news outlet Jalopnik reported on a Waymo vehicle that appeared to get stuck in a roundabout in Arizona, circling at least 37 times.”

“It’s like people are the experiment”, commented the victim.

People are indeed the experiment. Many modern, technology-dense products, like cars, are so complex that they will always be beta-stage products, or even perpetual beta products, with new features and gizmos being added all the time. They contain an unknown number of unknown bugs, and it is up to the unfortunate customers to run into them and, hopefully, report them. But why is this the case?

In a recent blog post, we discussed how the Principle of Fragility will always get in the way of supercomplex products operating in uncertain environments. Basically, if you put something very complex face to face with uncertainty, you can count on a myriad of unexpected and counter-intuitive outcomes. This boils down to one word: fragility. High complexity induces fragility. This is particularly true for perpetual beta-stage products, where the constant incorporation of new, often useless gadgets amplifies the complexity of an already overly complex software-electronics ecosystem. This ecosystem can never be fully tested and debugged. Even if it could be, the cost would be immense. It is cheaper for the manufacturer to shift the risk onto the shoulders of the customers. Let them find the bugs. It is a sort of technological bailout, carried out not by a government but by the public.

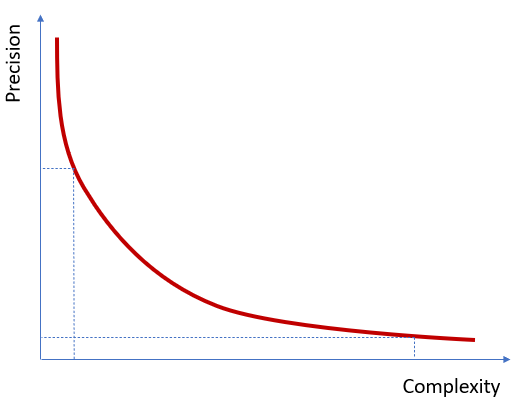

Aristotle wrote in his Nicomachean Ethics: an educated mind is distinguished by the fact that it is content with that degree of accuracy which the nature of things permits, and by the fact that it does not seek exactness where only approximation is possible.

The above is the ancient version of Zadeh’s Principle of Incompatibility (1973):

High precision is incompatible with high complexity

Another way to express the Principle is:

In a context of high complexity, precise statements are irrelevant and relevant statements are imprecise

An example of an irrelevant and precise statement: in 2055 the ocean level will rise by 5.7 centimeters.

An example of a relevant and imprecise statement: there is a high probability of rain tomorrow.

Both of these statements stem from the fact that the climate and its dynamics are insanely complex.

The plot below conveys the idea. The further you go to the right, the less precise things get. And there is nothing you can do about it.

Back to cars. The act of driving is a very precise exercise. There are numerous rules to respect, very often to the letter. Red means “stop”, not “almost stop” or “stop if you want to”. When traffic gets complex, driving can be stressful and hazardous. High speed, bad weather, and alcohol can make things even more complex, but the rules remain the same. So, if traffic is complex, and cars are complex and driven by complex beta-stage software, things get very imprecise. Finding bugs and identifying their causes becomes increasingly difficult. Do you see the point?

A tragic example of what happens when you go against the Principle of Incompatibility is the MCAS system added to the Boeing 737 MAX 8 aircraft. Pilots were not informed of its existence, to the extent that it wasn’t even mentioned in the manuals. It was up to the unfortunate passengers on Lion Air flight 610 and Ethiopian Airlines flight 302 to help debug the software.

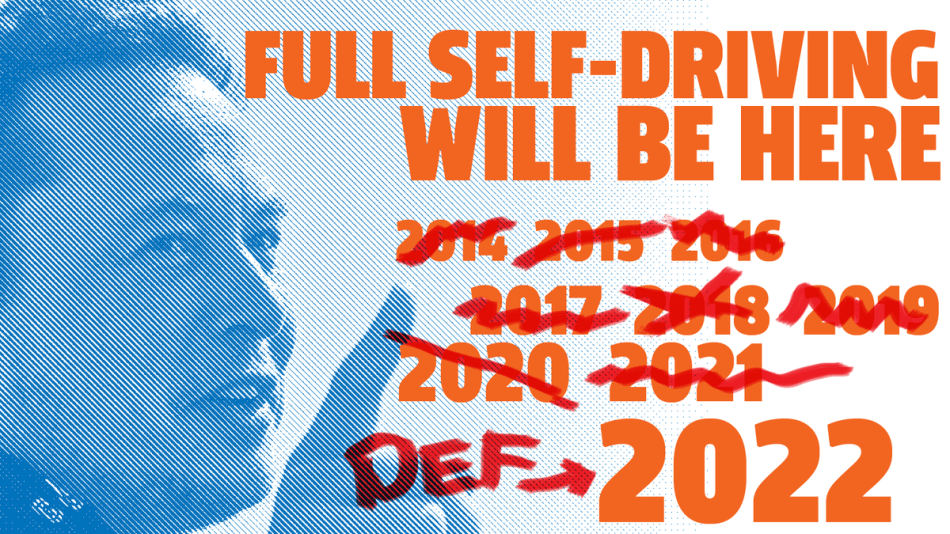

The meme below is a tribute to the Principle of Incompatibility. Two things to keep in mind: 1) Nature offers no free lunch, and 2) Nature doesn’t negotiate. So, don’t insist.

