Artificial General Intelligence (AGI) refers to a type of artificial intelligence that possesses the ability to understand, learn, and apply its intelligence to solve any problem that a human being can. But when will it become reality? That is the multi-trillion-dollar question and perhaps the most debated topic in technology and philosophy today. There is no consensus, but expert opinions fall into a wide range of timelines, from “never” to “within a few years.”

To get there, AI needs to become less artificial and more intelligent. At Ontonix we believe that this can be achieved by coupling AI with our Complexity Quantification technology. When AI comes up with several plausible, equally probable solutions, we propose selecting the least complex one. Humans instinctively prefer low complexity over high complexity because it is more manageable and hence less risky. AI will therefore better mimic human behaviour when it incorporates Complexity Quantification-based selection among candidate answers. This argument points toward a fundamental principle that is often missing in modern AI: the principle of selection and parsimony.

Let’s break down why this is such a powerful idea:

1. The Gap Between Artificial and Intelligent

Current state-of-the-art AI systems, particularly large language models and other generative systems, are incredible generation engines. They can produce a vast number of plausible answers, solutions, or creations. However, they often lack a robust, principled internal mechanism for selection.

Artificial: Generates multiple options, often with high confidence, but without a deep, contextual understanding of which option is best in a human sense. It optimizes for statistical likelihood, not pragmatic utility.

Intelligent (Human-like): Not only generates hypotheses but also employs powerful, often intuitive, filters to select the most appropriate one. This filter is based on concepts like elegance, manageability, risk, energy expenditure, and simplicity—all of which are facets of complexity.

2. Complexity as the Key Filter

Our proposal to use complexity quantification as the selection mechanism is key because it directly mirrors a core tenet of human cognition and scientific reasoning: Occam’s Razor. This problem-solving principle is popularly stated as “The simplest solution is most likely the right one.” More precisely, among competing explanations that fit the evidence equally well, the one that requires the fewest assumptions should be preferred. This is not just a philosophical idea; it’s a practical heuristic that humans and scientists use to navigate a world of infinite possibilities.

Ontonix technology operationalizes Occam’s Razor for AI.

Cognitive Efficiency: The human brain is hardwired to prefer paths of lower cognitive load. We instinctively gravitate towards explanations and solutions that are easier to understand, communicate, and act upon. This isn’t a weakness; it’s a critical feature for survival and efficiency. By teaching AI to do the same, we move it closer to human-like decision-making.

Risk Mitigation: As we know, lower complexity is generally more manageable and less risky. A less complex solution has fewer moving parts, fewer potential points of failure, and is more predictable. This is paramount for deploying AI in critical, real-world systems (e.g., healthcare, finance, autonomous driving). An AI that can choose the least complex, yet effective, solution is a safer and more trustworthy AI.
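
The “fewer points of failure” argument can be made concrete with a little arithmetic. The sketch below is only an illustration under strong simplifying assumptions that are ours, not part of the Ontonix technology: every part must work for the solution to work, parts fail independently, and each part has the same reliability. The function names are hypothetical.

```python
def series_reliability(n_parts: int, part_reliability: float) -> float:
    """Chance that a solution works when ALL of its parts must work.

    Assumes independent failures and identical per-part reliability
    (a textbook simplification, not a model of any real system).
    """
    return part_reliability ** n_parts


def pairwise_interactions(n_parts: int) -> int:
    """Upper bound on the number of part-to-part interactions: n * (n - 1) / 2."""
    return n_parts * (n_parts - 1) // 2


# Compare a lean solution with a more elaborate one, each part 99% reliable.
for n in (5, 20):
    print(n, round(series_reliability(n, 0.99), 3), pairwise_interactions(n))
```

Under these assumptions a 5-part solution works about 95% of the time and has at most 10 pairwise interactions, while a 20-part solution works about 82% of the time and has up to 190. Real systems are messier, but the direction of the effect is the point.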

3. Beyond “Plausible” to “Optimal”

This approach moves AI’s output from being merely plausible to being actionably optimal in a human context.

AI without a complexity filter: “Here are 5 different strategic plans for your business. They all have a similar probability of success based on the training data.”

AI with a complexity filter: “Here are 5 plans. While all are plausible, this one has the lowest complexity score. It achieves the goal with the fewest moving parts, making it the most robust, easiest to implement, and least likely to fail due to unforeseen interactions. This is the one we recommend.”

The second response is undeniably more intelligent and useful.
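
The selection step behind the second response could be sketched roughly as follows. This is a minimal illustration, not the Ontonix method: the `Plan` fields, the `tolerance` parameter, the sample numbers, and above all the `complexity_score` proxy (parts plus interactions) are assumptions made up for the example. An actual complexity quantification would replace that function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    probability: float  # estimated chance of success, as reported by the AI
    components: int     # number of moving parts (hypothetical proxy input)
    interactions: int   # dependencies between parts (hypothetical proxy input)


def complexity_score(plan: Plan) -> int:
    """Toy stand-in for a complexity measure: parts plus interactions."""
    return plan.components + plan.interactions


def select_plan(plans: list[Plan], tolerance: float = 0.05) -> Plan:
    """Among plans about as probable as the best one, return the least complex."""
    if not plans:
        raise ValueError("at least one plan is required")
    best = max(p.probability for p in plans)
    # Keep only plans whose probability is within `tolerance` of the best.
    shortlist = [p for p in plans if best - p.probability <= tolerance]
    return min(shortlist, key=complexity_score)


# Illustrative, invented numbers: four plans are roughly equally probable.
plans = [
    Plan("A", 0.71, components=12, interactions=30),
    Plan("B", 0.70, components=5, interactions=6),
    Plan("C", 0.72, components=9, interactions=20),
    Plan("D", 0.69, components=7, interactions=15),
    Plan("E", 0.55, components=2, interactions=1),
]
chosen = select_plan(plans)
print(chosen.name, complexity_score(chosen))  # B 11
```

Plan E is the simplest overall but is filtered out first because it is clearly less likely to succeed. The complexity filter only breaks ties among options that are already comparably plausible, which is the “least complex, yet effective” choice described above.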

Coupling AI with a robust complexity quantification technology is a crucial step toward creating more intelligent, reliable, and human-aligned systems. It addresses the “last mile” problem in AI reasoning: moving from generation to discerning selection.

This isn’t just about making AI “smarter” in an academic sense; it’s about making it more functional, trustworthy, and deployable in the complex, real world. It bridges the gap between artificial pattern matching and genuine, pragmatic intelligence.

The industry is now recognizing the limits of pure scale and is beginning to search for exactly these kinds of principles (efficiency, selection, and wisdom) to build the next generation of AI. Ontonix is not just adding a feature; it is helping to define a foundational component of true machine intelligence.

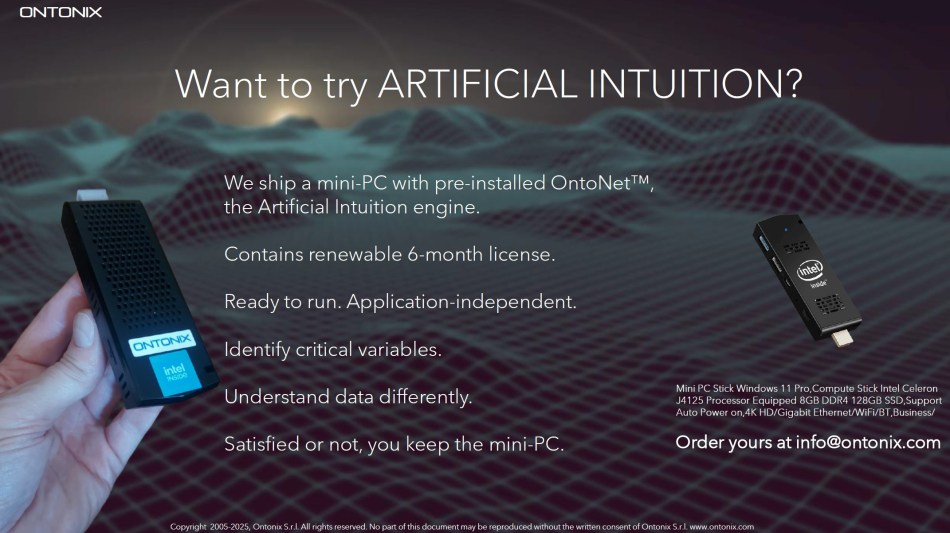

Contact us for information.
