The Principle of Incompatibility, introduced by Lotfi Zadeh in 1973, states:

“As the complexity of a system increases, our ability to make precise and yet significant statements about its behavior diminishes until a threshold is reached beyond which precision and significance (or relevance) become almost mutually exclusive characteristics.”

In simpler terms:

High precision is incompatible with high complexity

In practice, this means that if you have something very complex, it will not behave precisely, and any precise statements about it will be irrelevant. Think of humans and human nature.

In other words: The more complex a problem is, the less meaningful precise statements become.

This principle highlights a fundamental trade-off:

- High Precision + High Significance/Relevance = Only possible for simple systems

- Complex Systems = You must choose between precision and relevance

In essence, the principle states that a precise solution to a highly complex problem is not possible, and one has to settle for something approximate, fuzzy, or just good enough.

The Principle of Incompatibility applies to everything: climate, society, economics, engineering, human nature, or Artificial “Intelligence”.

In a recent paper, “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity”, Apple researchers have shown that:

“the best reasoning LLMs perform no better (or even worse) than their non-reasoning counterparts on low-complexity tasks and that their accuracy completely collapses beyond a certain problem complexity.”

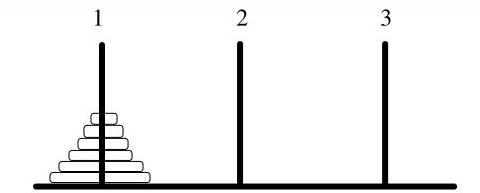

Let’s look at the Tower of Hanoi problem: move all disks from needle 1 to needle 3 (or 2), moving only one disk at a time and never placing a larger disk on top of a smaller one.

This problem has only one precise solution, even though there are various ways of obtaining it. The paper shows that the LLMs collapse beyond ten disks, with accuracy falling to 0%.
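To make the demand for precision concrete, here is a minimal Python sketch (not taken from the paper) of the classic recursive solution, together with a checker that replays a move list and rejects it on the first illegal move. The function names `hanoi` and `is_valid_solution` are illustrative assumptions. The shortest solution for n disks takes 2^n - 1 moves, so ten disks already require 1,023 moves, and every single one of them must be correct.

```python
# Illustrative sketch only; function names are hypothetical, not from the cited paper.
# Pegs ("needles") are numbered 1, 2, 3; disks are numbered 1 (smallest) to n (largest).

def hanoi(n, source=1, target=3, spare=2, moves=None):
    """Return the list of (disk, from_peg, to_peg) moves that solves n disks recursively."""
    if moves is None:
        moves = []
    if n == 0:
        return moves
    # Move the n-1 smaller disks out of the way, onto the spare peg.
    hanoi(n - 1, source, spare, target, moves)
    # Move the largest disk directly to the target peg.
    moves.append((n, source, target))
    # Move the n-1 smaller disks from the spare peg onto the largest disk.
    hanoi(n - 1, spare, target, source, moves)
    return moves


def is_valid_solution(n, moves, source=1, target=3):
    """Replay the moves; return False on any illegal move or if the puzzle is unsolved."""
    pegs = {1: [], 2: [], 3: []}
    pegs[source] = list(range(n, 0, -1))  # bottom of the stack is the largest disk
    for disk, a, b in moves:
        # The moved disk must be the top disk of peg a.
        if not pegs[a] or pegs[a][-1] != disk:
            return False
        # A larger disk may never be placed on a smaller one.
        if pegs[b] and pegs[b][-1] < disk:
            return False
        pegs[b].append(pegs[a].pop())
    return pegs[target] == list(range(n, 0, -1))


if __name__ == "__main__":
    for n in (3, 10, 15):
        moves = hanoi(n)
        print(n, len(moves), is_valid_solution(n, moves))
```

Dropping or altering even one move in the 1,023-move sequence for ten disks makes `is_valid_solution` return `False`: there is no partial credit, which is exactly the kind of high-precision demand the principle says becomes hard to meet as complexity grows.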

From the cited paper: “As complexity increases, reasoning models initially spend more tokens while accuracy declines gradually, until a critical point where reasoning collapses—performance drops sharply and reasoning effort decreases.”

It is also stated that:

“Our detailed analysis of reasoning traces further exposed complexity-dependent reasoning patterns, from inefficient “overthinking” on simpler problems to complete failure on complex ones. These insights challenge prevailing assumptions about LLM capabilities and suggest that current approaches may be encountering fundamental barriers to generalizable reasoning.”

One such fundamental barrier emanates from the Principle of Incompatibility. Nature offers no free lunch. And it doesn’t negotiate.

The Tower of Hanoi illustration (source)
