The understanding and management of risk and uncertainty are important not only in engineering but in all spheres of social life. As the complexity of man-made products rapidly increases, so does their exposure to risk. Risk emanates from a combination of uncertainty and complexity: high complexity induces fragility and, therefore, vulnerability. Examples of complex systems include ecosystems, society, the economy, power grids, traffic, sophisticated spacecraft and the internet. Most of these systems are adaptive; some are not.

Complex systems are characterized by a huge number of possible failure modes, and it is practically impossible to analyze them all. The alternative, therefore, is to design systems that are robust, i.e. that possess a built-in capacity to absorb both expected and unexpected random variations in operating conditions without failing or compromising their function. This resilience comes at a price: the system is no longer optimal (optimality being a property tied to a single, precisely defined operating condition) but performs acceptably over a wide range of conditions. In fact, robustness and optimality are mutually exclusive. Complex systems are driven by so many interacting variables, and are designed to operate over such wide ranges of conditions, that their design must favor robustness over optimality. A natural way of building robustness into a system is to attack complexity directly at its source. The Principle of Incompatibility, coined by L. Zadeh, states that “high precision is incompatible with high complexity”. In other words, the more complex a system becomes, the more speculative our statements about it will be. It is a fact of life, almost a law of Nature. The only way to reduce the impact of complexity and uncertainty is to understand how complex systems function, how they interact and how they respond to uncertainty and to a changing environment.
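
To make the trade-off between optimality and robustness concrete, here is a minimal Python sketch. The two performance curves, the Gaussian scatter of the operating condition and the acceptance threshold of 6.0 are hypothetical choices made purely for illustration; they do not describe any particular system. The "optimal" design wins at the nominal condition, but the flatter "robust" design performs acceptably far more often once the operating condition varies randomly.

```python
import random

# Hypothetical performance curves: the "optimal" design peaks sharply at the
# nominal operating condition x = 0; the "robust" design is lower there but flatter.
def optimal_design(x: float) -> float:
    return 10.0 - 40.0 * x * x

def robust_design(x: float) -> float:
    return 8.0 - 2.0 * x * x

def evaluate(design, n: int = 10_000, sigma: float = 0.3,
             threshold: float = 6.0, seed: int = 1):
    """Sample random operating conditions; return mean performance and the
    fraction of samples whose performance is at least `threshold`."""
    rng = random.Random(seed)
    perf = [design(rng.gauss(0.0, sigma)) for _ in range(n)]
    mean = sum(perf) / n
    acceptable = sum(p >= threshold for p in perf) / n
    return mean, acceptable

for name, design in [("optimal", optimal_design), ("robust", robust_design)]:
    mean, ok = evaluate(design)
    print(f"{name:8s} nominal={design(0.0):.1f} mean={mean:.2f} acceptable={ok:.3f}")
```

With these assumed numbers the optimal design is acceptable in only about 70% of the sampled conditions, against more than 99% for the robust one.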

There is an ongoing debate on whether computers can help generate knowledge. The oldest way of generating knowledge is experimentation. Ever since man began to experiment, at first without rigor and almost accidentally, and later, thanks to Galileo, in a more or less systematic manner, he has realized that knowledge is equivalent to experience. Experience, in turn, is a set of conclusions drawn from repeated experiments. Experimentation by computer, that is, simulation in its true sense, is therefore instrumental in generating knowledge. Monte Carlo Simulation (MCS) is the simplest, most universal and most versatile way of experimenting via computing and, therefore, establishes a rigorous platform for the actual computation of knowledge.
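
A minimal sketch of experimentation by computer: a deterministic model (here assumed to be the textbook cantilever tip deflection P L³ / (3 E I)) is run many times with randomly sampled inputs, and conclusions are drawn from the collection of results rather than from any single run. The load and stiffness distributions and the beam dimensions are hypothetical values chosen for illustration.

```python
import random
import statistics

def cantilever_deflection(load_n: float, modulus_pa: float,
                          length_m: float = 2.0, inertia_m4: float = 8e-6) -> float:
    """Deterministic model: tip deflection of a cantilever, P L^3 / (3 E I)."""
    return load_n * length_m ** 3 / (3.0 * modulus_pa * inertia_m4)

def monte_carlo(n: int = 20_000, seed: int = 42) -> list:
    """Run the deterministic model n times with randomly sampled inputs."""
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        load = rng.gauss(1_000.0, 100.0)   # assumed: 10% scatter on the load
        modulus = rng.gauss(200e9, 10e9)   # assumed: 5% scatter on stiffness
        results.append(cantilever_deflection(load, modulus))
    return results

runs = monte_carlo()
print(f"mean = {statistics.mean(runs):.3e} m, stdev = {statistics.stdev(runs):.3e} m")
```

Each entry of `runs` is one classical analysis; only the ensemble says anything about how the deflection scatters.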

An example of what Monte Carlo Simulation delivers, a meta-model, is illustrated below. Each point in the constellation corresponds to a single deterministic computer run, essentially an analysis in the classical sense. The shape and topology of a given meta-model depend on the physics behind a particular problem. Meta-models can be extremely complex and intricate, and they carry a tremendous amount of knowledge. It is therefore clear that a single point has limited value, regardless of how much detail is invested in its computation. The primary objective is to explain the reasons behind a meta-model’s shape: its local density fluctuations, the formation of clusters, its underlying structure and topology, the existence of isolated points (outliers), etc. It is precisely these attributes of a meta-model that constitute the true knowledge of a given system, and that can be translated into concrete engineering design decisions. Our OntoNet tool has been conceived with this very purpose in mind: to extract the knowledge hidden in complex multi-dimensional meta-models.
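
The sketch below builds a small meta-model under stated assumptions. Each tuple is one deterministic run of a hypothetical two-input model that jumps to a different regime in a small corner of its input space. Isolated points are then flagged with a median/MAD robust z-score, a standard statistical technique used here only as a stand-in; it is not OntoNet's algorithm, which the text does not describe.

```python
import random
import statistics

def model(x1: float, x2: float) -> float:
    """Hypothetical deterministic model with a rare jump to a different regime."""
    jump = 10.0 if x1 * x2 > 0.95 else 0.0
    return 2.0 * x1 + x2 + jump

def build_meta_model(n: int = 5_000, seed: int = 7):
    """Each tuple (x1, x2, y) is one deterministic run; together they form the cloud."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        points.append((x1, x2, model(x1, x2)))
    return points

def outliers(points, threshold: float = 3.5):
    """Indices of isolated points, using a robust (median/MAD) z-score on the output."""
    ys = [p[2] for p in points]
    med = statistics.median(ys)
    mad = statistics.median(abs(y - med) for y in ys)
    return [i for i, y in enumerate(ys) if 0.6745 * abs(y - med) / mad > threshold]

cloud = build_meta_model()
flagged = outliers(cloud)
print(f"{len(flagged)} of {len(cloud)} runs are isolated points")
```

The median and MAD are used instead of the mean and standard deviation so that the outliers themselves barely shift the reference against which they are judged.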

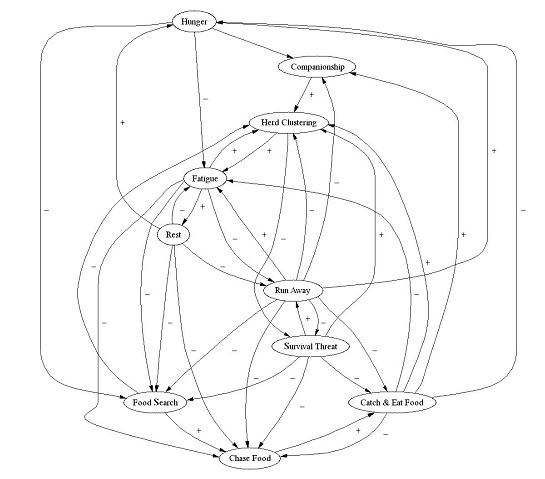

Many decision-making and problem-solving tasks are too complex to be understood quantitatively; on a qualitative basis, however, it is possible to attack very complex problems using knowledge that is imprecise rather than precise. Such knowledge can be expressed more naturally using Fuzzy Cognitive Maps. The benefit of describing complex systems via Cognitive Maps lies in their capability to describe real-world problems, which inevitably entail some degree of imprecision and noise in the variables and parameters. Complex systems are usually composed of a number of dynamic agents interrelated in intricate ways. Feedback propagates causal influences in complicated chains. Formulating a mathematical model may be difficult, costly or even impossible. An example of a Fuzzy Cognitive Map of a dolphin is depicted below. In a Fuzzy Cognitive Map, variable concepts or sub-components are represented by nodes. The graph’s edges are the causal influences between the concepts/components. The value of a node reflects the degree to which the concept is active in the system at a particular time. Cognitive Maps prove invaluable in engineering, as they enable one to comprehend the information flow within a system and to appreciate how components interact. Today, with the techniques developed at Ontonix, it is possible to generate Cognitive Maps automatically instead of constructing them manually from subjective knowledge, as has been done in the past.
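
To show how node values evolve in a Fuzzy Cognitive Map, here is a minimal sketch assuming a common Kosko-style update with self-feedback, A_i ← f(A_i + Σ_j w_ji A_j), with a logistic squashing function f. The four concepts, their causal weights and the choice to clamp the scenario inputs are hypothetical illustrations, not taken from the dolphin map or from Ontonix's tools.

```python
import math

# Hypothetical concepts and causal weights in [-1, 1]: (source, target) -> weight.
WEIGHTS = {
    ("load", "stress"): 0.8,
    ("stress", "damage"): 0.7,
    ("maintenance", "damage"): -0.6,
}
CONCEPTS = ["load", "stress", "damage", "maintenance"]

def sigmoid(x: float, lam: float = 1.0) -> float:
    """Logistic squashing function keeping activations in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-lam * x))

def run_fcm(initial: dict, clamped: set, max_iter: int = 100, tol: float = 1e-6):
    """Iterate A_i <- f(A_i + sum_j w_ji * A_j) until activations settle.
    Returns (final_state, converged_flag)."""
    state = dict(initial)
    for _ in range(max_iter):
        new = {}
        for c in CONCEPTS:
            if c in clamped:          # scenario inputs are held fixed
                new[c] = state[c]
                continue
            total = state[c] + sum(w * state[src]
                                   for (src, dst), w in WEIGHTS.items() if dst == c)
            new[c] = sigmoid(total)
        delta = max(abs(new[c] - state[c]) for c in CONCEPTS)
        state = new
        if delta < tol:
            return state, True
    return state, False

start = {"load": 1.0, "stress": 0.0, "damage": 0.0, "maintenance": 0.2}
final, converged = run_fcm(start, clamped={"load", "maintenance"})
print(converged, {k: round(v, 3) for k, v in final.items()})
```

Re-running the map with a higher clamped value for `maintenance` lowers the settled activation of `damage`, which is the kind of qualitative "what if" question such maps are meant to answer.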

Below, an example of a Cognitive Map of an engineering system is depicted. The rectangles represent design quantities/components, while the ellipses correspond to performance descriptors. In this case, the information flow within the system is evidently intricate, and most of the quantities affect each other both directly and indirectly.
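
Direct and indirect influences can be read off a directed map with a simple breadth-first search. The nodes and edges below form a hypothetical engineering map (design quantities pointing to performance descriptors), not the system in the figure. The function `influences` returns the nodes a quantity affects through a single link, and those it reaches only through intermediate nodes.

```python
from collections import deque

# Hypothetical directed map: design quantities -> performance descriptors.
EDGES = {
    "thickness": ["mass", "stiffness"],
    "material": ["stiffness", "cost"],
    "stiffness": ["deflection", "frequency"],
    "mass": ["frequency", "cost"],
    "deflection": [],
    "frequency": [],
    "cost": [],
}

def influences(node: str):
    """Return (direct, indirect): nodes linked to `node` by one edge, and nodes
    reachable only through intermediate nodes (not also direct targets)."""
    direct = set(EDGES.get(node, []))
    seen, queue = set(direct), deque(direct)
    while queue:
        for nxt in EDGES.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return direct, seen - direct

for n in ["thickness", "material"]:
    d, i = influences(n)
    print(n, "direct:", sorted(d), "indirect:", sorted(i))
```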

The map has been computed from the results of a Monte Carlo Simulation. Fuzzy cognitive maps are dynamic entities: when the value of a certain node changes, for example due to a changing environment, the map changes topology. Certain links between nodes vanish, and others may appear. Cognitive maps can therefore provide invaluable insight into a system’s state and functioning. This is precisely what OntoNet does: it finds all the states (cognitive maps) in which a system, or at least a model of a system, may find itself functioning. All that is required is a computer model of the system, a Monte Carlo simulation environment to obtain the corresponding meta-model, and OntoNet to determine the system’s cognitive maps. But OntoNet does more than that. It can also pinpoint outliers and pathological behavior, such as butterfly effects, clustering and bifurcations, and help one understand how to avoid them.
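
One simple way to derive a map from Monte Carlo results, and to watch its topology change with conditions, is to link every pair of variables whose sample correlation exceeds a threshold. This is a swapped-in illustrative technique, not OntoNet's method: Pearson correlation captures only linear, undirected dependence, and the model `y = x1 + coupling * x2 + noise` and the 0.3 threshold are hypothetical. Setting `coupling` to zero mimics an environmental change under which the x2-y link vanishes.

```python
import random

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5

def simulate(coupling: float, n: int = 2_000, seed: int = 3):
    """Monte Carlo samples of the hypothetical model y = x1 + coupling * x2 + noise."""
    rng = random.Random(seed)
    data = {"x1": [], "x2": [], "y": []}
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        data["x1"].append(x1)
        data["x2"].append(x2)
        data["y"].append(x1 + coupling * x2 + rng.gauss(0.0, 0.05))
    return data

def cognitive_map(data, threshold: float = 0.3):
    """Keep an undirected link wherever |correlation| exceeds the threshold."""
    names = sorted(data)
    return {(a, b) for i, a in enumerate(names) for b in names[i + 1:]
            if abs(pearson(data[a], data[b])) > threshold}

print(sorted(cognitive_map(simulate(coupling=1.0))))  # links x1-y and x2-y
print(sorted(cognitive_map(simulate(coupling=0.0))))  # the x2-y link vanishes
```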

OntoNet is an innovative technology: in addition to generating all the possible modes of functioning that a computer model can exhibit, it provides a measure of the amount of complexity in a system, and the means to design systems with complexity in mind. Complexity offers a new and powerful way of understanding and describing systems from a holistic perspective. Which systems? For example, these:

• Stock market

• Power grids

• Bio data

• Traffic data

• Any system that can be modeled on a computer

OntoNet also supports innovative robustness measures. A popular, established but essentially incorrect measure of robustness is the spread of performance: systems in which the scatter of performance is substantial are said to be non-robust, while those in which it is small are said to be robust. However, scatter is a measure of quality, not robustness. Cognitive Map technology and complexity measures can be used to define new and more rigorous robustness measures, which in turn can be used to better understand and design systems. A holistic approach to system design is possible if holistic measures and system attributes are available. Take, for example, the stock market. Descriptors such as the popular NASDAQ or DJIA indices, or market volatility, are global attributes that don’t reflect the structure of the information they convey. They are simple and succinct, but that’s all. Historical stock data, on the other hand, is just the opposite: huge volumes of data that provide fine-grained information but, again, give no flavor of the market’s hidden and dynamic structure. If one really wants to understand what is going on, a metric is needed that reflects structure and organization. With OntoNet we don’t impose models. Models filter information in ways that depend on how they have been built: first order or second order? Such decisions ultimately affect the value of the model. We make no assumptions: we use model-free approaches that work on essentially raw, unfiltered data. Assumptions have to be tested, and testing them is an additional cost.
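
The claim that scatter says nothing about structure can be illustrated with two hypothetical three-variable systems that have nearly identical per-variable scatter but very different coupling. The `link_count` indicator below, the number of variable pairs whose absolute correlation exceeds 0.3, is an assumed and deliberately crude structural proxy for illustration; it is not OntoNet's complexity or robustness measure.

```python
import random
import statistics

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5

def sample_system(coupled: bool, n: int = 3_000, seed: int = 11):
    """Three unit-variance variables, either independent or sharing a common driver."""
    rng = random.Random(seed)
    cols = [[], [], []]
    for _ in range(n):
        common = rng.gauss(0.0, 1.0)
        for col in cols:
            own = rng.gauss(0.0, 1.0)
            # Variance is 0.9 + 0.1 = 1 in the coupled case and 1 otherwise.
            col.append(0.9 ** 0.5 * common + 0.1 ** 0.5 * own if coupled else own)
    return cols

def scatter(cols):
    """Conventional spread-based view: one standard deviation per variable."""
    return [statistics.stdev(c) for c in cols]

def link_count(cols, threshold: float = 0.3):
    """Hypothetical structural proxy: number of strongly correlated pairs."""
    return sum(abs(pearson(cols[i], cols[j])) > threshold
               for i in range(len(cols)) for j in range(i + 1, len(cols)))

indep_sys, coupled_sys = sample_system(False), sample_system(True)
print("scatter:", [round(s, 2) for s in scatter(indep_sys)],
      [round(s, 2) for s in scatter(coupled_sys)])
print("links:  ", link_count(indep_sys), link_count(coupled_sys))
```

Both systems would be rated equally "robust" by their scatter, yet one has no links at all and the other is fully interconnected.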

When complexity changes, and in particular when the structure and topology of the complexity metric change, one can be sure that something is happening. Slow, small changes in many autonomous agents may pass unobserved, but when they combine, macroscopic events may take place. We can track these changes and help understand what is going on. The combined effects of many small changes can often be surprising, and simple statistical models will miss them.
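
A sketch of tracking structural change over time, under stated assumptions: three hypothetical agents whose mutual coupling drifts slowly upward. Each variable keeps unit variance throughout, so its scatter alone reveals nothing, while a per-window count of strongly correlated pairs (a crude, assumed proxy, not OntoNet's metric) rises from 0 to 3.

```python
import random

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5

def drifting_series(t_len: int = 2_000, seed: int = 5):
    """Three hypothetical agents whose pairwise correlation rho grows from 0 to 0.8."""
    rng = random.Random(seed)
    series = [[], [], []]
    for t in range(t_len):
        rho = 0.8 * t / t_len
        common = rng.gauss(0.0, 1.0)
        for s in series:
            # Unit variance at every t: rho + (1 - rho) = 1.
            s.append(rho ** 0.5 * common + (1.0 - rho) ** 0.5 * rng.gauss(0.0, 1.0))
    return series

def links_per_window(series, window: int = 200, threshold: float = 0.3):
    """Count strongly correlated pairs in consecutive, non-overlapping windows."""
    counts = []
    for start in range(0, len(series[0]) - window + 1, window):
        w = [s[start:start + window] for s in series]
        counts.append(sum(abs(pearson(w[i], w[j])) > threshold
                          for i in range(3) for j in range(i + 1, 3)))
    return counts

series = drifting_series()
print(links_per_window(series))
```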

But why is complexity so important? The answer lies in a very simple equation:

Complexity × Uncertainty = Fragility

Uncertainty is an intrinsic property of our environment, something we have to live with: think of earthquakes, wind gusts, wave-induced loading, etc., none of which we can control. Complexity, on the other hand, is often human-induced. Therefore, in order to limit the fragility or vulnerability of a system, it is complexity that must be controlled. This implies, first of all, that one understands complexity, which in turn requires a practical means of measuring and quantifying it. With complexity-based techniques it is possible to rigorously build failure into the design process and into the business model. “Faster Better is Cheaper, if Safer” is the new philosophy in aerospace system design; it has replaced “Faster, Better and Cheaper”, which led to the loss of many recently launched spacecraft. But safer does not just mean a small probability of failure. Probability of failure is, again, a simple piece of information that gives no indication of how imminent failure is. It has no structure and is based on assumed failure modes (once again, assumptions must be made and verified). But how can we do this credibly in a real, complex system exposed to butterfly effects? Clearly, new methods are necessary. These new methods are available today and are a blend of stochastic simulation technology (essentially Monte Carlo simulation) and complexity management tools such as OntoNet. This is shown in the figure below. In the past, there have been two strategies to reduce the probability of failure of a system:

• Strategy 1: increase the factor of safety (redesign, which increases cost)

• Strategy 2: reduce variability (impose more stringent tolerances, increasing cost)

We can now propose a new strategy:

• Strategy 3: reduce complexity (which decreases the system’s intrinsic fragility)
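
The first two strategies can be illustrated with a classic load-versus-strength model, in which failure occurs when a random load exceeds a random strength. The normal distributions and their parameters below are hypothetical. The sketch estimates the probability of failure by Monte Carlo simulation and checks it against the closed form Φ(-β) available for the normal case. Strategy 3 has no counterpart in such a two-variable model, so the sketch does not attempt it.

```python
import random
from statistics import NormalDist

def failure_probability(mu_r, sd_r, mu_s, sd_s, n: int = 200_000, seed: int = 0):
    """Monte Carlo estimate of P(load > strength) for normal load S and strength R."""
    rng = random.Random(seed)
    failures = sum(rng.gauss(mu_s, sd_s) > rng.gauss(mu_r, sd_r) for _ in range(n))
    return failures / n

def exact(mu_r, sd_r, mu_s, sd_s):
    """Closed form for the normal case: Phi(-beta), beta = margin / combined spread."""
    beta = (mu_r - mu_s) / (sd_r ** 2 + sd_s ** 2) ** 0.5
    return NormalDist().cdf(-beta)

# (mean strength, strength stdev, mean load, load stdev), all hypothetical
cases = {
    "baseline": (150.0, 15.0, 100.0, 20.0),
    "1: safety factor up": (175.0, 15.0, 100.0, 20.0),
    "2: variability down": (150.0, 7.5, 100.0, 10.0),
}
for name, args in cases.items():
    print(f"{name:22s} MC={failure_probability(*args):.5f} exact={exact(*args):.5f}")
```

Both strategies drive the number down, yet the result remains a single scalar: it says nothing about the structure of the system, which is the limitation discussed above.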

Stochastic methods have been around for 10-15 years; the first commercial tools started to appear in the second half of the 1990s. However, even though prestigious car manufacturers have adopted these technologies, their take-up hasn’t been spectacular. Why? Essentially because of the lack of post-processing tools that would eliminate the need to plough through hundreds of pie-charts, scatter plots, histograms and CDFs, not to mention sensitivities. OntoNet provides, for the first time, a post-processing environment that can extract real, practical knowledge from the results of a Monte Carlo simulation: knowledge that can be used to take concrete action.

The OntoNet technology, however, is not just a post-processing environment. Complexity can be tracked and used as a health-monitoring aid for a multitude of systems, with applications ranging from power grids to battle management, nuclear power plants, the stock market, etc. Understanding the structure of complexity can help one better understand the nature and functioning of such systems and detect anomalies that can only be spotted if the system is viewed from a holistic perspective. It must be mentioned, however, that only endogenous phenomena can be detected and tracked. No software will reveal that lightning will strike a transformer in a power grid, causing a power outage.

Today, as systems become more complex and costly, it is often important to pay more attention to risk avoidance than to pure performance. The obsession with peak performance and specialization increases a system’s vulnerability and exposes it to the adverse effects of an uncertain environment. Clearly, it becomes necessary to relax performance in order to reduce risk and gain robustness, but to do this in a holistic and controlled manner, complexity must be known. Ultimately, complexity must be managed, and managing complexity means understanding the relationships and rules that govern the system’s functioning. OntoNet determines the relationships between system variables in the vicinity of attractors found in multi-dimensional meta-models. These attractors correspond to the possible states, or modes, in which the system may function. The groups of relationships, or rules, that OntoGen finds in every mode are precisely the previously mentioned Fuzzy Cognitive Maps. But why, one may ask, do we resort to fuzzy measures? Because of the Principle of Incompatibility. It states that, for a system functioning in an uncertain environment, the more components the system has, the less precise the statements we can make about it. In other words, such statements become more and more speculative. Complex systems are fuzzy. Consider, for example, the economy, climate, the ecosphere, traffic in a large city, a complex battle scenario, a large power grid or society. For none of these systems can crisp statements be made, let alone predictions of future states of functioning. These systems are fuzzy, and the more complex they become, the fuzzier they get.

Another good reason to treat systems as fuzzy is that models are only models and often carry a large number of assumptions, which ultimately add uncertainty to the problem. Therefore, models cannot provide precise answers. However, a great deal may still be learnt about such systems, provided that we approach them from a holistic perspective and try to understand the structure of their complexity. Today, this is possible.
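
The idea of first finding the modes of a meta-model and then the relationships that hold within each mode can be sketched with plain 2-means clustering followed by a per-cluster correlation. This is an assumed stand-in for illustration only: the text does not say how OntoNet locates attractors, and the two-mode data set is synthetic. Pooled together, the runs would suggest a single, strongly positive x-y relationship, hiding the fact that the relationship is negative in one of the modes.

```python
import random

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5

def two_mode_meta_model(n: int = 400, seed: int = 9):
    """Hypothetical runs falling into two modes with opposite x-y relationships."""
    rng = random.Random(seed)
    pts = []
    for i in range(n):
        if i % 2 == 0:   # mode A, around (0, 0): y rises with x
            x = rng.gauss(0.0, 0.5)
            pts.append((x, x + rng.gauss(0.0, 0.1)))
        else:            # mode B, around (5, 5): y falls as x rises
            x = rng.gauss(5.0, 0.5)
            pts.append((x, 10.0 - x + rng.gauss(0.0, 0.1)))
    return pts

def kmeans2(pts, iters: int = 20):
    """Plain 2-means with farthest-point initialization
    (assumes neither group ever becomes empty)."""
    def dist2(p, q):
        return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

    centers = [pts[0], max(pts, key=lambda p: dist2(p, pts[0]))]
    groups = [[], []]
    for _ in range(iters):
        groups = [[], []]
        for p in pts:
            nearest = 0 if dist2(p, centers[0]) <= dist2(p, centers[1]) else 1
            groups[nearest].append(p)
        centers = [(sum(p[0] for p in g) / len(g), sum(p[1] for p in g) / len(g))
                   for g in groups]
    return groups

points = two_mode_meta_model()
for g in kmeans2(points):
    r = pearson([p[0] for p in g], [p[1] for p in g])
    print(f"mode with {len(g)} runs: x-y correlation {r:+.2f}")
print(f"pooled: {pearson([p[0] for p in points], [p[1] for p in points]):+.2f}")
```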

When a system transitions from one functioning mode to another (imagine a power grid in which a certain element fails), the relationships between the remaining elements change, as do the key elements (hubs), the critical paths, the level of redundancy and other important system features. How these changes affect overall system performance can be understood by taking a global, complexity-oriented look at things. But complexity is not just about a large number of components. It is primarily about how these components interact, and about the topology of these interactions.
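
The effect of a single failure on hubs and on redundancy can be sketched with basic graph analysis. The seven-node grid below is hypothetical; hubs are taken to be the nodes of highest degree, and `islands` lists the connected parts that remain once a substation is removed. Losing node E, for example, changes the set of hubs and splits the grid in two.

```python
from collections import deque

# Hypothetical grid: substations as nodes, transmission lines as undirected edges.
LINES = [("A", "B"), ("B", "C"), ("C", "D"), ("B", "E"), ("E", "F"),
         ("C", "E"), ("F", "G")]

def adjacency(lines, failed=frozenset()):
    """Neighbour sets of every surviving node (isolated survivors are kept)."""
    nodes = {n for line in lines for n in line} - set(failed)
    adj = {n: set() for n in nodes}
    for u, v in lines:
        if u in adj and v in adj:
            adj[u].add(v)
            adj[v].add(u)
    return adj

def hubs(adj):
    """Nodes with the highest number of connections."""
    top = max(len(nbrs) for nbrs in adj.values())
    return sorted(n for n, nbrs in adj.items() if len(nbrs) == top)

def islands(adj):
    """Connected components found by breadth-first search."""
    seen, parts = set(), []
    for start in sorted(adj):
        if start in seen:
            continue
        part, queue = set(), deque([start])
        seen.add(start)
        while queue:
            node = queue.popleft()
            part.add(node)
            for nxt in adj[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        parts.append(sorted(part))
    return parts

for failed in [set(), {"E"}]:
    adj = adjacency(LINES, failed)
    print(f"failed={sorted(failed)} hubs={hubs(adj)} islands={islands(adj)}")
```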

The blackout that struck a large area of North America on Aug. 14, 2003 demonstrated that a single event can disrupt the supply of electricity to many places hundreds of miles apart. Following the August 14 power outage in the eastern United States, Mr. Kyle Datta stated in a memorandum of the Rocky Mountain Institute (RMI): “In 1996, western United States lost power because a power line heated up, sagged, and shorted out. In 1998, there were two noteworthy power failures: ice storms knocked out power systems in eastern Canada and the United States, and the city of Auckland, New Zealand lost power for over two months due to a transmission line failure. Thursday’s power loss appears to be from a strike of lightning. RMI has warned of the weakness of the grid for years, notably via the Institute’s founders’ 1982 book Brittle Power: Energy Strategy for National Security, which describes the vulnerability of the North American electric grid to attack, accident, and natural disaster…. Today’s power system consists of relatively few and large units of generation and transmission, sparsely interconnected and heavily dependent on a few critical nodes (many of which are nearing capacity). The system provides relatively little storage to buffer surges and shortages and generation units are located in clusters, remote from the loads they serve. Even a weather-induced surge of electricity or a failure of a major power plant or transmission line can cascade through the system and cause massive technical, social, public health, and national security problems—as they did on Aug. 14. Our electrical system, as we predicted over twenty years ago, is and remains extremely brittle…. The interesting challenge is not understanding why the centralized system failed once again, said Datta, but to understand which elements of the system are able to maintain electricity supply in the face of overall system failure.” This kind of understanding may be provided by OntoNet.

It has been shown recently that optimality produces fragility. Our message is that managing complexity can lead to better and intrinsically more robust designs than has ever been possible before. Pursuing peak performance while overlooking the holistic aspects of complex systems is not going to be the future paradigm in system analysis or design. In the past, Monte Carlo Simulation has been used in a somewhat traditional manner: computing CDFs and probabilities of failure, evaluating multi-dimensional integrals, solving differential equations, etc. With OntoNet, we’ve found a new way of using this powerful and versatile simulation technology: generating Fuzzy Cognitive Maps and managing a system’s complexity. A totally new way of putting computers to work.
