The technology developed by Ontonix is particularly relevant in today’s world, where the complexity of systems and manufacturing processes is increasing due to technological advancements, globalization, and interconnectedness. This increasing complexity is becoming a new and insidious source of risk. Complexity-induced risk cannot be understood and countered with traditional means due to one key reason:

A highly complex system can fail in countless ways

Very often, these are far from intuitive. One cannot prepare for the unexpected. The recent blackout in Spain, one of the worst ever in Europe, is an eloquent example.

In a recent article one may read: “What causes power outages? Power outages can have several triggers, including natural disasters and extreme weather, human-caused disasters, equipment failures, overloading transformers and wires and so on.” Surprisingly, the high complexity of the grid is not mentioned at all! If blackouts were a matter of transformers and wires, one would imagine that the issue could have been solved with sound engineering. And yet, power outages continue to occur. In February 2025, a huge outage took place in Chile, affecting 90% of the population. Since 1999, on average one major outage per year has been registered, see list.

Ontonix’s concept of Artificial Intuition is an innovative approach that enables one to “intuitively” understand and respond to complex, dynamic environments. It is designed to mimic human-like intuition in monitoring and decision-making processes, particularly in situations where traditional data-driven methods fall short due to overwhelming complexity or a lack of clear patterns.

The dynamics of complex systems can be overwhelming. Such systems are rarely in equilibrium. Their behaviour cannot be extrapolated from that of their components. Their dynamics are nonlinear, often containing elements of randomness, chaos, bifurcations, attractors, period-doubling, limit cycles, multistability, solitons, etc.

Complexity is a new systemic descriptor of this type of dynamics, and it captures the intensity of Structure <-> Entropy transformations, which take place in all physical processes. This new quantity takes into account all data channels involved in a monitoring process, as well as all the possible interdependencies between those channels. In large systems, the number of such interdependencies can be overwhelming. It is impossible to understand them all, and yet they are the reason why complex systems can fail in so many ways and why, very often, it is impossible to understand why.

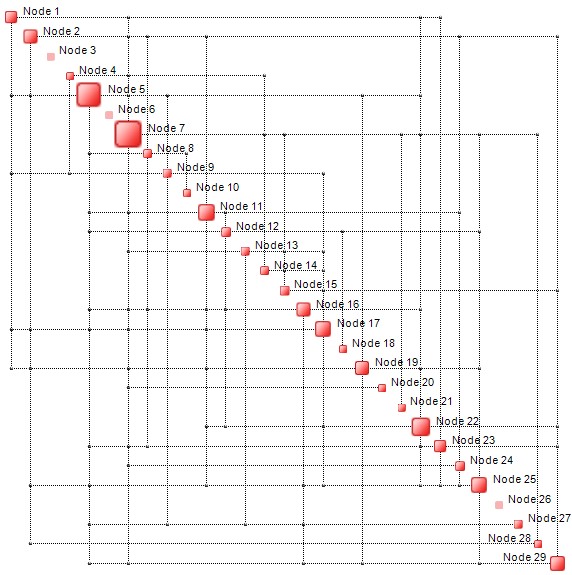

Below is an example of a Complexity Map of a small network – 29 nodes, 87 interdependencies (these are not necessarily physical connections) – ‘photographed’ at a particular instant in time.

Should the network collapse, performing a digital autopsy is not so difficult. There are two clearly dominant nodes – 5 and 7 – which is where one would go first in order to determine what went wrong. In the next example below there are 223 nodes and 1451 interdependencies. Here too, a few dominant nodes can be spotted, where one would investigate possible causes of trouble.

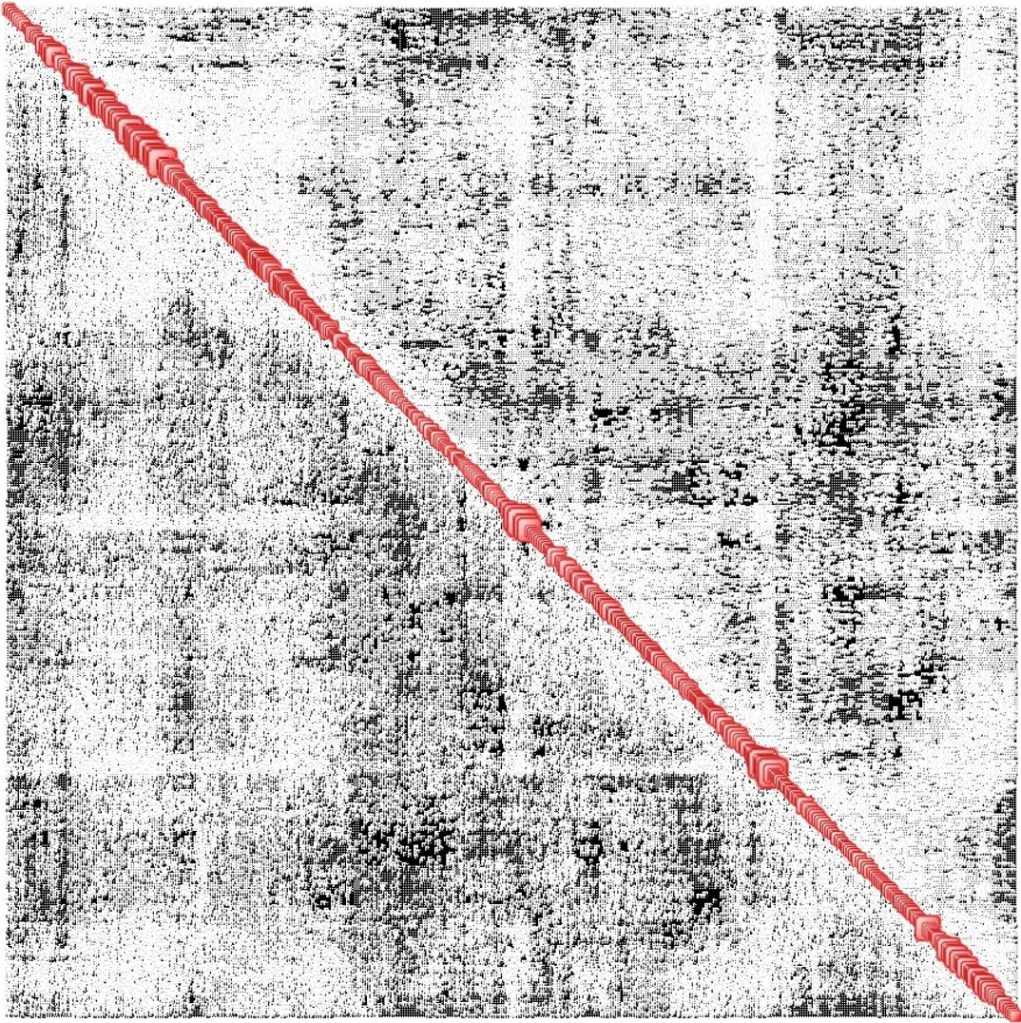

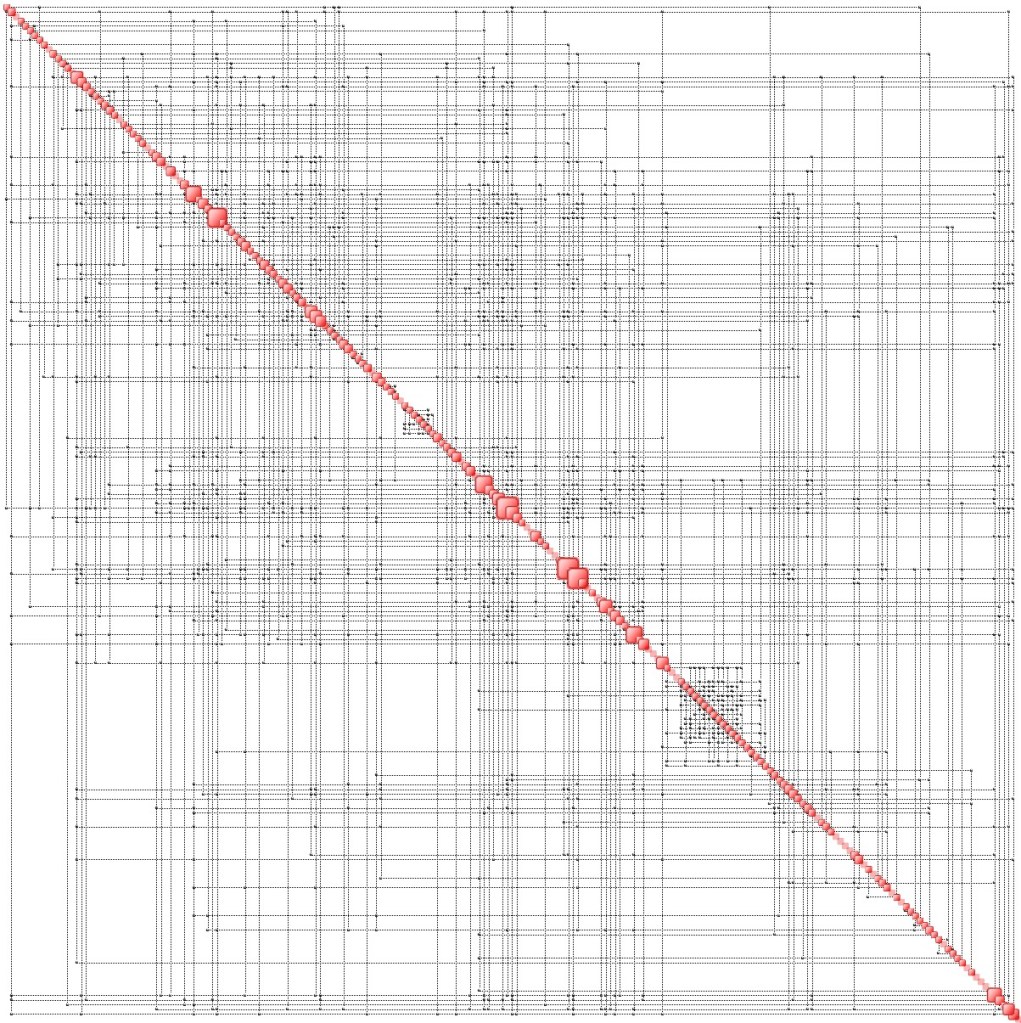

In the last case, the situation is dramatic, to say the least. There are 1305 nodes and 210 000 interdependencies. The number of dominant nodes is now large. And keep in mind that this is just a snapshot at a given moment in time. All of these maps change over time.
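To compare the three maps on a common footing, the short Python sketch below computes how many pairwise interdependencies each map could contain at most, what fraction is actually present, and how many links an average node carries. It assumes that every interdependency is an undirected link between two distinct nodes, which the text does not state; the function names and the labels "small", "medium" and "large" are purely illustrative.

```python
def max_pairs(nodes: int) -> int:
    """Number of distinct unordered node pairs: n * (n - 1) / 2."""
    return nodes * (nodes - 1) // 2


def summarize(nodes: int, links: int) -> dict:
    """Density and average links per node, assuming undirected pairwise links."""
    possible = max_pairs(nodes)
    return {
        "possible": possible,
        "density": links / possible,          # share of possible pairs that are linked
        "links_per_node": 2 * links / nodes,  # each link touches two nodes
    }


# (label, nodes, interdependencies) as quoted in the text for the three maps
maps = [("small", 29, 87), ("medium", 223, 1451), ("large", 1305, 210_000)]

for label, nodes, links in maps:
    s = summarize(nodes, links)
    print(
        f"{label:>6}: {nodes} nodes, {links} links, "
        f"{s['possible']} possible pairs, "
        f"density {s['density']:.1%}, "
        f"{s['links_per_node']:.1f} links per node"
    )
```

Under that assumption, an average node in the small map is tied to 6 others, while an average node in the largest map is tied to more than 300, which is one way of seeing why a handful of dominant nodes no longer stands out.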

A large electrical grid can have anywhere from thousands to hundreds of thousands of nodes, depending on its size and complexity. Here’s a breakdown:

- Transmission-Level Grid (High Voltage):

  - Typically has thousands of nodes (substations, generators, and major load centers).

  - Example: The U.S. Eastern Interconnection has over 60,000 transmission nodes.

- Distribution-Level Grid (Medium/Low Voltage):

  - Includes millions of nodes (transformers, feeders, and end-users).

  - A single city’s distribution network may have tens of thousands of nodes.

- Entire National/Regional Grid:

  - Large interconnected systems (like Europe’s ENTSO-E or China’s State Grid) can have hundreds of thousands to millions of nodes when including both transmission and distribution.

For power flow studies, transmission grids are often modeled with 5,000–100,000 nodes, while full distribution networks can exceed millions. Get the drift?
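To put a rough number on this, suppose that any pair of nodes is a candidate interdependency. This is a simplifying assumption, since the text does not say how interdependencies are counted. The number of candidate pairs then grows quadratically with the number of nodes $n$:

$$
P(n) = \binom{n}{2} = \frac{n(n-1)}{2}
$$

For the two ends of the transmission-model range quoted above:

$$
P(5\,000) = \frac{5\,000 \times 4\,999}{2} = 12\,497\,500,
\qquad
P(100\,000) = \frac{100\,000 \times 99\,999}{2} = 4\,999\,950\,000
$$

A twentyfold increase in nodes thus yields roughly a four-hundredfold increase in candidate interdependencies.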

John Gall’s Laws of Systemantics are not what one would call science, yet they capture the essence and nature of complex systems. We cite a few, just for fun:

- The Fundamental Theorem: New systems generate new problems.

- Laws of Growth: Systems tend to grow, and as they grow, they encroach.

- The Generalized Uncertainty Principle: Systems display antics. (Complicated systems produce unexpected outcomes. The total behaviour of large systems cannot be predicted.)

- A complex system that works is invariably found to have evolved from a simple system that works.

- The Functional Indeterminacy Theorem (F.I.T.): In complex systems, malfunction and even total non-function may not be detectable for long periods, if ever.

- The Fundamental Failure-Mode Theorem (F.F.T.): Complex systems usually operate in failure mode.

- A complex system can fail in an infinite number of ways. If anything can go wrong, it will.

- The mode of failure of a complex system cannot ordinarily be predicted from its structure.

- The crucial variables are discovered by accident.

- The larger the system, the greater the probability of unexpected failure.

- The Fail-Safe Theorem: When a Fail-Safe system fails, it fails by failing to fail safe.

- Complex systems tend to produce complex responses (not solutions) to problems.

- Great advances are not produced by systems designed to produce great advances.

- Colossal systems foster colossal errors.

We point out two:

A complex system can fail in an infinite number of ways

and

The crucial variables are discovered by accident

The bottom line is that super-complex critical infrastructures cannot be monitored and managed using inadequate analytical techniques that neglect complexity, their hallmark. To prevent the collapse of complex systems, their complexity – the cause of their inherent fragility – must be considered.

In Essence

Quantitative Complexity Management by Ontonix bridges the gap between complexity science and practical decision-making. By transforming complexity into actionable metrics, organizations can preemptively address systemic risks, optimize performance, and build resilient systems. In an era defined by interconnectedness and volatility, QCM offers a roadmap to navigate—and master—the chaos of modern systems.

Conclusion

Complexity-Induced Risk is an inherent challenge in modern systems, where sophistication often comes at the cost of reduced stability and increased fragility. As systems grow more intertwined, from AI-driven economies to software-heavy products, understanding and mitigating complexity-induced risks will be critical to sustainable progress.

As the adage goes: “Complex systems tend to fail in complex ways.” Recognizing this truth is the first step toward building resilience and sustainability in an increasingly interconnected world.

Contact us for information.

Such a wonderful post once again! As someone fortunate enough to be trained and mentored as a complex systems analyst by the author, I’ve found that the biggest benefit of Quantitative Complexity Management (QCM) is its ability to uncover crucial variables driving complexity in complex systems early on. These complexities often surprisingly rewrite the narrative of complex systems. They’re so critical that analysts and decision-makers mustn’t leave their discovery to chance. Ontonix provides timely clarity on these leverages.
